Who Lied about Muslim Suicide?

Muslims, including Imams and Zakat donors, got scammed on mental health. It's important to understand how.

Author’s note: This is a combined article covering how Muslims were fooled into thinking being Muslim makes them twice as likely to attempt suicide. If you have already read parts one and two, start from the header “Certified error-free!” by scrolling down. If you are getting this by email, click “view entire message” to see it. If you have not read this series before, this article is all you need.


“O Allah, show me the truth as truth and guide me to follow it. Show me the false as false and guide me to avoid it.”

-Dua of Umar (RA)

In 2021, JAMA (Journal of the American Medical Association) Psychiatry published a research letter with a shocking conclusion: Muslims are twice as likely to attempt suicide as people of other faiths or those who have no faith at all.

You have probably heard about this, as the authors promoted their “research letter” aggressively in the news media at the time. The conclusion is still widely cited by Muslim mental health practitioners and used as zakat and other donation fundraising fodder for organizations like Maristan and Khalil Center, the leaders of which created this bombshell research. The idea that Muslims are more than twice as likely to attempt suicide is now accepted as a scientific fact within much of the Muslim community. It’s also not true.

I understand that many Muslim donors will be impressed that this was published in a journal like JAMA Psychiatry by well-known mental health leaders within the Muslim community. I urge you to keep an open mind to the possibility things in medical journals can be wrong, and Muslim non-profit leaders can brazenly lie to Zakat donors and Imams. If you don’t think these things can possibly be true, there would be no point in reading on or subscribing to this newsletter.

Much of this saga involves lying using statistics, but don’t let that intimidate you. I endeavored to write this article for people who don’t have statistical backgrounds, but you will need to be patient as we unwind this. By the end, I hope it will be plain.

It starts with an opinion poll

You are probably already familiar with opinion polls: the ones that ask a small sample, purporting to be representative of a larger population, questions such as whether respondents prefer Democrats or Republicans. Well, our story begins with that kind of poll. The Institute for Social Policy and Understanding (ISPU) runs something called the “American Muslim Poll.” To produce this “American Muslim Poll,” ISPU hires a polling company to survey Muslims, Jews, and others on social and political views. ISPU then promotes the results, offers insights to the media, and then, naturally, collects zakat from the Muslim community because they believe this activity somehow constitutes “intellectual jihad.”

How does ISPU decide which questions to include in their poll? Well, at least in part, they sell questions to interested parties. In preparation for the 2019 American Muslim poll, it had sold a couple of questions to authors of what ultimately became the JAMA Psychiatry research letter (the ISPU Director of Research, Dalia Mogahed, is also credited as an author).

The poll

ISPU’s roles include some of the survey design (in consultation with a vendor and clients), fundraising, marketing, and promotion. The poll was conducted by Social Science Research Solutions (SSRS), which is rated a “C” pollster by FiveThirtyEight, on the low side (A+ is the highest rank) because of the organization’s 60% accuracy in predicting election results.

Margins of error

In 2019, ISPU surveyed 2,376 people, in separate surveys of different groups, to find out how many Muslims, Jews, and other people had tried to kill themselves. Among those surveyed, 7.9% of Muslims, 5.1% of Protestants, 6.1% of Catholics, and 3.6% of Jews reported trying to kill themselves at some point. But the number for Muslims is not necessarily worse than everyone else's because of the margin of error, which means the actual number could be a little (or a lot) higher or lower. Remember, this is a small sample, and it does not perfectly represent the populations of people who follow these faith traditions.

Once you incorporate ISPU’s own margins of error, the numbers mean somewhere between 3% and 12.8% of all Muslims attempted suicide at some point, and somewhere between 0% and 11.2% of Jews did. This is because of a ±4.9% margin of error for Muslims and a ±7.6% margin of error for Jews. ISPU didn't survey other religions separately as it did with Muslims and Jews, so it didn't provide “margin of error” information for other faiths, but if you calculate it yourself you will find all these numbers on suicide fall within the margins of error and thus don’t tell you anything.
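For readers who want to check the arithmetic, here is a minimal Python sketch of it. It uses only the percentages and margins of error quoted above; the function names `interval` and `overlaps` are my own illustrative choices, and clamping the range at 0% is an assumption (a negative percentage of people makes no sense).

```python
def interval(estimate_pct, margin_pct):
    """Return the (low, high) range in percent, clamped to 0-100."""
    low = max(0.0, estimate_pct - margin_pct)
    high = min(100.0, estimate_pct + margin_pct)
    return round(low, 1), round(high, 1)


def overlaps(a, b):
    """True when two (low, high) ranges share at least one value."""
    return a[0] <= b[1] and b[0] <= a[1]


# Figures quoted in the article: point estimate and margin of error.
muslims = interval(7.9, 4.9)
jews = interval(3.6, 7.6)

print("Muslims:", muslims)
print("Jews:", jews)
print("Ranges overlap:", overlaps(muslims, jews))
```

When two ranges overlap like this, the poll by itself cannot tell you which group's true rate is higher.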

The “research letter” does not use margins of error but rather something called a “p-value” to decide if the numbers mean something. Margins of error and p-values can be used to fool people into thinking worthless information is earth-shatteringly important. In some cases (and as I will show here) it may be easy to simply lie about the numbers to get the desired result.

ISPU didn't mention the suicide attempt data for Muslims in their report on the 2019 American Muslim poll as that would have been pointless. It became worth discussing once the numbers were massaged (or tortured).

When adjusting for demographic factors

The authors of the JAMA Psychiatry Research Letter achieved headlines based on the strength of this conclusion:

US Muslim adults were 2 times more likely to report a history of suicide attempt compared with respondents from other faith traditions, including atheists and agnostics. (Emphasis added)

How do you get a shocking headline-grabbing result from what used to be a survey result that showed you nothing?

The JAMA letter authors include an important qualifier that carries mountains of weight here: their conclusion that American Muslims are twice as suicidal as everyone else only holds “when adjusting for demographic factors.” This raises the question: how did they “adjust” the numbers to come up with this result? The authors took numbers with no statistical significance and ran them through a computer program to perform “regression analysis,” a technique to show relationships between variables. Only after doing this did the authors get results that would otherwise be impossible to obtain.

Let’s Frankenstein the data

Yaqeen Institute Scholar and statistician Dr. Osman Umarji (writing through his University of California, Irvine affiliation) wrote to JAMA Psychiatry (his long-form reanalysis is here) and then posted a comment to the research letter after re-running the data. In it, he describes two principal critiques, which I will endeavor to simplify:

The first is that the authors started with nothing of value. There was nothing to report regarding the number of Muslims who attempt suicide and other faith groups, at least not from the ISPU data. Remember, you take the raw numbers, and they not only don’t show Muslims are twice as likely to attempt suicide, but they also provide you with no conclusions at all. There was no justification for doing a regression analysis in the first place. This is because it’s only going to result in torturing data so it can tell you what you want it to say.

The second criticism is that the result of this data torture (regression analysis) is a “suppressor effect,” an error that statisticians have known about for over 100 years. Dr. Umarji took the position that the result was false mainly because of the way the authors treated race, accounting for a major variable that did not exist.

Fun with race

The ISPU data combined with the JAMA authors’ work portrayed an American Muslim community as more Non-Arab White than Asian.

The US Muslim Community, according to the JAMA authors (the ISPU report showed this somewhat differently) in 2019, was 26% black, 26% white, 24% Asian, 10% other, and 14% Arab.

The factors that led the JAMA authors’ regression to erroneous results were:

The reference group (regression analysis needs them) was non-Arab white, a group with a high rate of suicide attempts and likely overrepresented in the American Muslim Survey.

ISPU, in its 2019 report, excluded Asians completely from their “general population” survey because their sample size was too small for them to have enough confidence in their numbers. The Muslim survey did include Asians. It’s odd that a medical journal research letter used numbers on Asians that ISPU, which was in charge of the survey the JAMA authors relied on, explicitly said it did not have confidence in.

According to ISPU data from 2019, “Arab” is a race in the United States that is exclusively Muslim. It’s not that ISPU had a small sample of non-Muslim Arabs in their data; they had none. The lack of any non-Muslim Arabs created a “correlation” between “Arab” and “Muslim”: when all Arabs in the data are Muslim, there is nothing to compare them with. A high correlation is known to cause inaccurate results in the kind of analysis the authors were doing. Since the computer program does not know what to do, it starts to give you garbage.

It should not be surprising that nobody has yet come up with a way to generate accurate conclusions from data that does not exist. An attempt to divine such a conclusion is not math or science, it’s soothsaying.
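To make the “data that does not exist” problem concrete, here is a small Python sketch. The records are entirely made up for illustration and are not ISPU data; the function name `attempt_rate` is likewise hypothetical. The only feature copied from the situation described above is that the sample contains no non-Muslim Arabs.

```python
# Hypothetical records: (is_muslim, race, attempted_suicide).
# These are invented for illustration only; they are NOT survey data.
records = [
    (True, "Arab", False),
    (True, "Arab", True),
    (True, "Asian", False),
    (True, "White", False),
    (False, "White", True),
    (False, "White", False),
    (False, "Black", False),
    (False, "Asian", False),
]


def attempt_rate(rows, is_muslim, race):
    """Share of respondents in one religion-by-race cell who report an attempt.

    Returns None when the cell is empty, because there is nothing to estimate.
    """
    cell = [attempted for m, r, attempted in rows if m == is_muslim and r == race]
    if not cell:
        return None
    return sum(cell) / len(cell)


print("Muslim Arabs:", attempt_rate(records, True, "Arab"))
print("Non-Muslim Arabs:", attempt_rate(records, False, "Arab"))
```

With an empty cell, any comparison between Arab Muslims and Arab non-Muslims has no data behind it, so a model asked to separate “Arab” from “Muslim” is working from one side of the comparison only.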

You don’t need a degree in statistics or to understand terms like “suppressor effect” or multicollinearity to see that this kind of game-playing would go disastrously wrong, or exactly right if you were fishing for a specific result.

How to improve validity with invisible data

Rania Awaad, the lead author of the JAMA Psychiatry study, responded in her comment, stating about race:

[I]ncluding race in the model improves the predictive validity of the model and may provide a more accurate representation of the relationship between religion and suicide attempt. Race is a fundamental control variable that must be considered when studying any suicide epidemiologic study.

She further explains:

We can’t simply remove the variable because Arab Christians were underrepresented in this sample.

Awaad was overly generous to herself here. Arab Christians were not merely “underrepresented” - they did not exist. If race were a “fundamental control variable” when it came to studying Muslim suicide attempts, she should have obtained adequate data on the races she figured were important. Dr. Awaad is attempting to convince us data that is “fundamental” to her work must be incorporated even if it’s invisible to her and the software program she was using.

The JAMA Psychiatry authors mangled the numbers they had to arrive at their desired conclusion. There was no way to arrive at the authors’ conclusion that Muslims are twice as likely to attempt suicide without running these visible and invisible numbers through Dr. Awaad’s gratuitous regression analysis blender.

Certified error-free!

ISPU’s Dalia Mogahed, a credited co-author of the study, publicly claimed after Dr. Umarji’s critique that the authors had three independent biostatisticians review the work. The identity of these biostatisticians appears to be a secret, even from co-authors (including a couple of authors I spoke to who publicly bragged about their supposed existence) and JAMA itself.

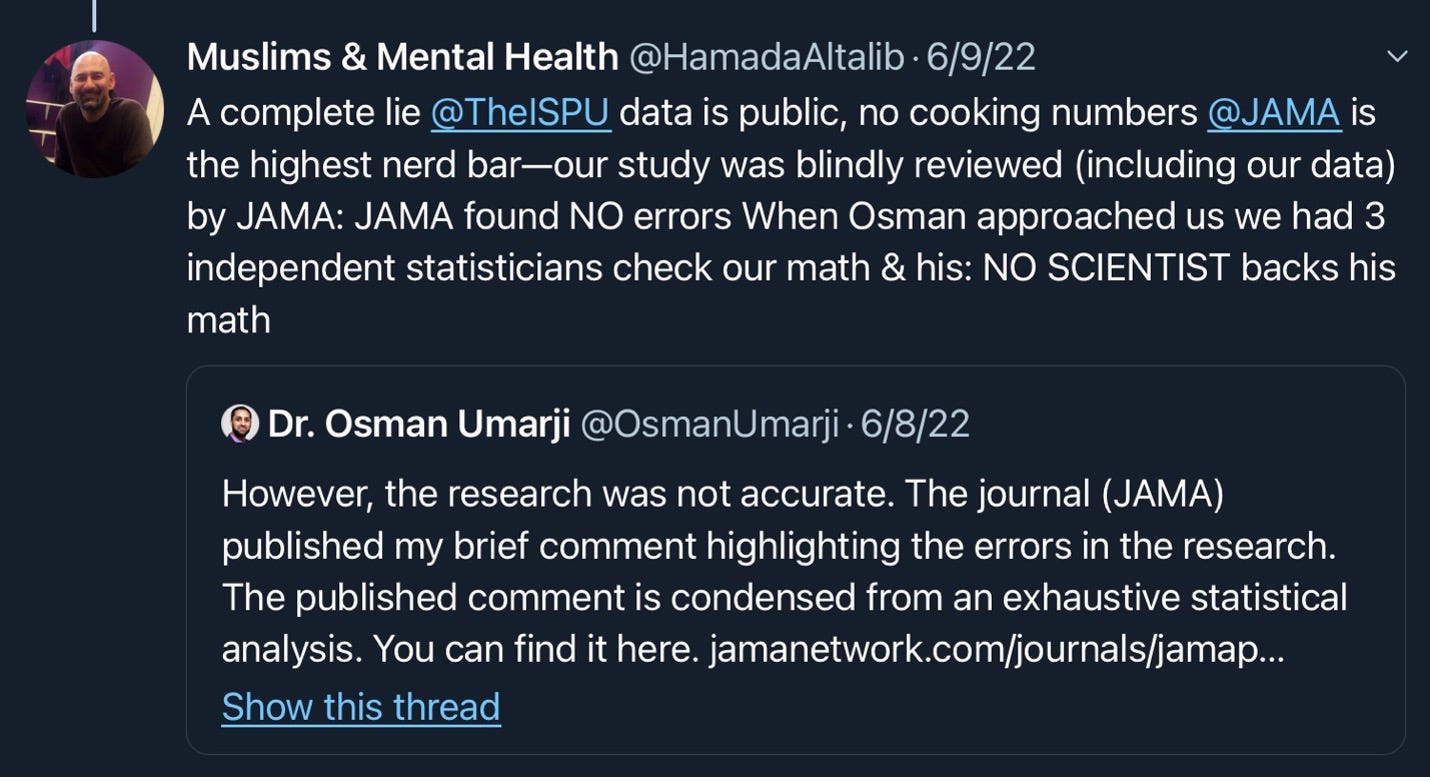

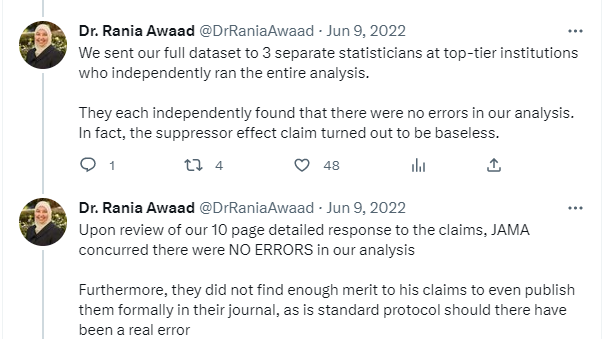

Various authors, including the Lead Author, Dr. Rania Awaad, and Senior Author, Dr. Hamada Altalib, took to Twitter to make similar claims, all the while accusing Dr. Osman Umarji of disinformation and being a liar.

Here are a few of the claims the co-authors made:

Three separate statisticians at top-tier institutions found no errors, and the suppressor error was baseless.

JAMA found “NO ERRORS” (emphasis in original) in the analysis, and on this basis “denied Dr. Umarji a platform to publish.”

The data was “blindly reviewed” by JAMA.

While the identity of the statisticians is secret, it’s impossible they found the suppressor error “baseless” since the authors themselves did not believe the suppressor error was baseless (I will get to that). I verified from JAMA editors I spoke with that the other two claims made by Dr. Awaad were false.

Dr. Awaad also alluded to a “rebuttal” sent to JAMA, which she did not make public. Fortunately, I obtained a copy, and when you read it, it’s easy to understand why she decided to keep it under wraps.

Agree in secret, attack in public

The problem is the authors themselves, in their “rebuttal” to JAMA Psychiatry, admitted Dr. Umarji’s claim (you can read his reanalysis letter to JAMA here) had validity. They did not disclose this to the public or to Dr. Umarji, whom they publicly attacked, accused of spreading disinformation, and called a liar, even claiming the “suppressor effect” error he described was baseless. The authors themselves privately told JAMA in their response (which I make public in the link):

In this sample, being Arab may be a confounder and serve as a suppressor variable. However, as we argue above it is critical to include race in the model so that readers can see the potential effect. Our intent of publishing this Research Letter is draw attention to an under-recognized issue and promote further discourse on suicide across communities. (emphasis added).

The authors privately agreed the suppressor effect concern was valid. The authors sent this to JAMA before telling the Muslim community on social media the suppressor effect was baseless and that Dr. Umarji was a liar and troll peddling disinformation. Both of those cannot be true at once. Drs. Awaad and Altalib lied to the Muslim community and unjustly attacked Dr. Umarji.

We also learned the research letter was not about reality but about “potential effect” and to promote “discourse on suicide.” The authors also claim that the suppressor effect may enhance accuracy, but of course, they have no way of knowing any of this and had no basis for publishing what amounts to a fever dream.

Why would JAMA verify no errors when the authors’ response agreed the data on Arabs may serve as a suppressor after all? Of course, JAMA never claimed there were no errors. That’s both ridiculous and explicitly against JAMA’s terms of use. Based on my conversation with JAMA editors, they do not rerun data. Despite Dr. Awaad’s penchant for speaking for JAMA on Twitter, one JAMA editor told me, “Dr. Awaad does not speak for JAMA.”

The incredible shrinking p-value

I previously mentioned Dr. Umarji had offered two criticisms of the JAMA Psychiatry suicide claims. The authors only seemed to secretly concede the second point (on a suppressor effect, while claiming it may still be a good thing somehow). The first was: why do a regression analysis on data that means nothing to start with? You can always torture useless data in a myriad of ways to make it seem like whatever you want. The authors disagreed with Umarji by claiming the unadjusted data was actually meaningful. The reason is something called “unadjusted odds ratios.” In this case, an odds ratio is a measure of association between two things, suicide and religion. This was another way of calculating something like a margin of error (a concept most of us non-statisticians are familiar with).

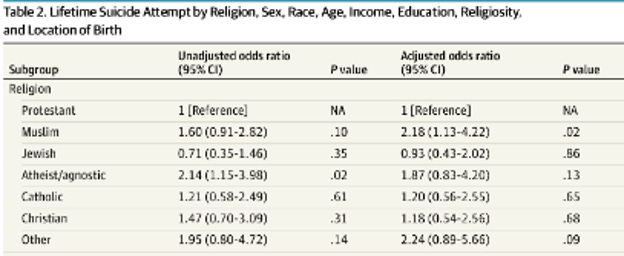

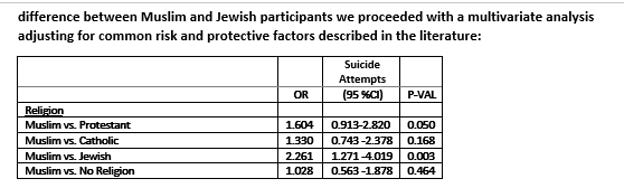

The JAMA Research letter provided an unadjusted odds ratio here; note that since Protestants are the dominant group and the reference, that is the number that mattered to the authors:

The most important number on this chart says that the p-value for Muslims compared with the reference population (Protestants) was 0.10 in the unadjusted odds ratio, which is the same as saying they have nothing or that it is statistically insignificant. In general, a p-value of 0.05 or less is statistically significant (and thus worth talking about). The p-value of 0.10 is more than 0.05 and thus worthless. It’s like showing an opinion poll with a margin of error of infinity in either direction. Who would care?
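For the curious, here is a rough Python sketch of how an unadjusted odds ratio and its p-value can be computed from a simple two-by-two table. I don't know which software or exact test the authors used, so treat this as one common textbook approach (a normal approximation on the log odds ratio), not their method. The counts are made up for illustration, and the function name `unadjusted_odds_ratio` is my own.

```python
import math


def unadjusted_odds_ratio(a, b, c, d):
    """Odds ratio, 95% confidence interval, and two-sided p-value.

    a, b: attempts and non-attempts in the group of interest.
    c, d: attempts and non-attempts in the reference group.
    Uses a normal approximation on the log odds ratio; all four counts
    must be greater than zero.
    """
    odds_ratio = (a * d) / (b * c)
    log_or = math.log(odds_ratio)
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    z = log_or / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided
    ci = (math.exp(log_or - 1.96 * se), math.exp(log_or + 1.96 * se))
    return odds_ratio, ci, p_value


# Made-up counts, NOT survey data: 20 of 100 in one group, 10 of 100 in the other.
odds_ratio, ci, p_value = unadjusted_odds_ratio(20, 80, 10, 90)
print(f"odds ratio = {odds_ratio:.2f}")
print(f"95% CI = ({ci[0]:.2f}, {ci[1]:.2f})")
print(f"p-value = {p_value:.3f}")
```

Note that the calculation is deterministic: the same four counts, run through the same method, always produce the same odds ratio and the same p-value.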

Both publicly and privately, the authors provided the unadjusted odds ratios again to justify why they would go on their data-Frankensteining expedition with “regression analysis.” How do they do this when we established they had nothing? Well, that’s where things get even more interesting.

Below is the table provided by Dr. Awaad publicly on the JAMA website comment section, rebutting Dr. Umarji’s comment:

The screenshot below is from the private “rebuttal” to JAMA Psychiatry’s editors:

Whiz-bang regression analysis was now justified because the p-value of Muslims vs. Protestants magically changed to 0.050 from 0.1 in the research letter itself, with the same data. What you are looking at with these charts is a change in the p-value, a measure of statistical significance in scientific literature. The authors took the liberty of making it up.

In scientific journals, researchers are sometimes accused of “p-hacking,” which is a process of manipulating data to get a result they can publish. This was not “p-hacking”: the authors simply deleted one number they published in their original JAMA research letter and replaced it with a different number without any new data or math to support it. It was a lie, an especially sloppy one to boot. You don’t need specialized math education to see it.

I notified an author of the doctored p-value several months ago. That co-author felt changing the p-value to defend the letter and attack Dr. Umarji was somehow not lying and cheating but did not explain how. A low p-value (0.05 or less) is vital for getting things published in scientific journals. In the article, reaching a low p-value for the unadjusted odds ratio was unnecessary since the authors’ tortured regression analysis gave them a great p-value with a massive fake difference between Muslim suicide attempt rates and everyone else. So, they kept the unadjusted odds ratio undoctored the first time.

But now that they had to privately admit Dr. Umarji had a point regarding the suppressor effect and correlated variables (data torture), the authors needed to show that they had a reason to do the regression analysis that got them the “potential” effect they wanted to show the world. So, the authors lied by making up a “p-value.”

This conduct should be impossible to defend.

A conflicts of interest bonanza

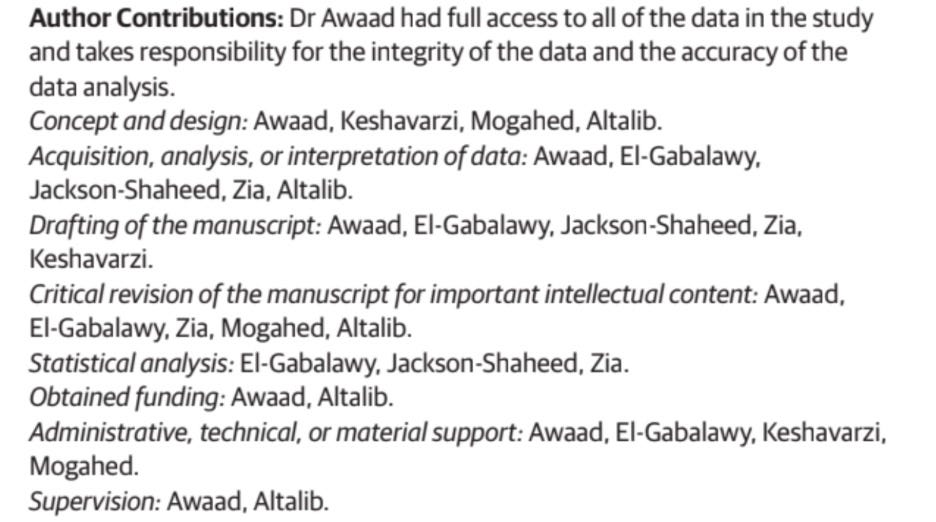

Two authors, Dr. Rania Awaad and Hooman Keshavarzi, failed to disclose conflicts of interest. Both have noted their affiliations with Maristan and Khalil Center in the article, but not in the section on conflicts.

This nondisclosure plainly goes against a principle stated in a JAMA editorial about disclosure:

an author who serves or recently has served as an officer of a medical society or advocacy organization who writes about topics that have relevance for that organization also is expected to include that additional information in his or her COI disclosures.

If you have an organization that advocates for the perspective that Muslim mental health is in horrible shape and your organization can help if more people send you money (especially Zakat), that may well color your findings. A Muslim mental health advocacy group is unlikely to herald a study finding Muslims attempt suicide at the same rates as everyone else or that they ran the numbers and have no idea about anything either way (leaving aside the question of who would publish such a thing).

The authors did not treat this letter as some sort of preliminary or tentative finding on Muslim suicide. Dr. Awaad brought in a media foghorn to promote the research letter aggressively. She leveraged the report to promote an alarmist and false claim that Muslims, because they are Muslim, are more likely to try to kill themselves. She also fundraised from the JAMA letter.

Dr. Hamada Altalib, a co-author, did disclose his conflict of interests with a Muslim Mental Health advocacy group, though he noted his role in it (as well as his role as editor of a journal) is voluntary, unlike the co-authors who failed to disclose the conflict. Other co-authors disclosed their affiliations though they may not be conflicts at all.

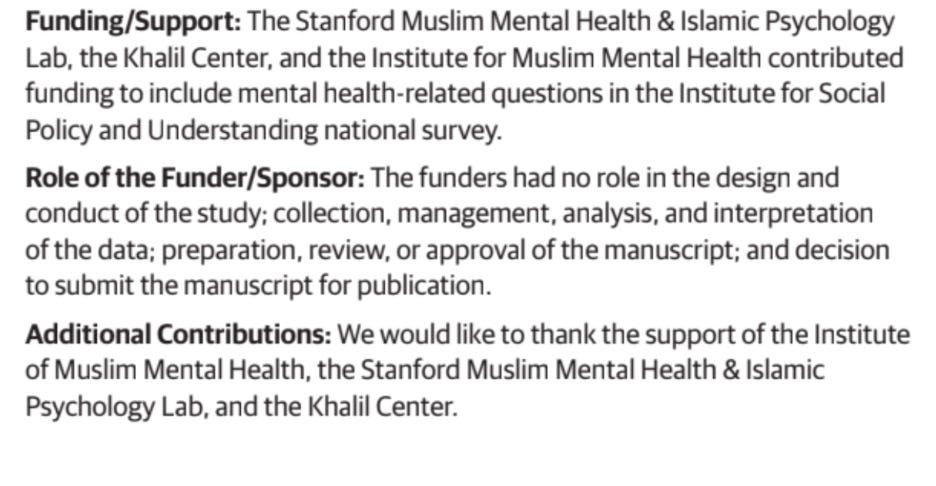

The funders did nothing: The funders did everything

The authors tell us three organizations that funded the study are the Stanford Muslim Health and Islamic Psychology Lab, the Khalil Center, and the Institute for Muslim Mental Health. Dr. Awaad, Dr. Keshavarzi, and Dr. Altalib lead these organizations.

Right below the disclosure on who the funders are, we have a statement on the role of the funders. The authors assure us the funders had no role in the “design and conduct of the study; collection, management, analysis, and interpretation of the data; preparation, review or approval of the manuscript; and the decision to submit the manuscript for publication.”

Above this helpful disclosure, we get this:

It’s plain that the funders of the study had full control of the study. Awaad, Keshavarzi, and Altalib headed organizations that funded the study- the same organizations we were assured had no role in doing the exact same things the three of them also claim to have done. The authors and funders (who claim to be Zakat-eligible) were the same.

Either there was a check-the-box game going on with the editors and peer reviewers (a copy editor should have caught the inconsistency), or there was a mistake in the disclosure.

Either way, it should give pause even for those Muslims who think “peer review” is the mark of excellence and a talisman to hold up asserting what is in those hallowed pages is truth.

Muslims should ignore grifting because we care about mental health

It’s essential to address the following from Dr. Awaad:

To be precise (fact-checking everything Dr. Awaad says would be exhausting), the research letter said nothing about an “increasing” Muslim suicide rate. Like so much of what Dr. Awaad says, she made that up.

Dr. Rania Awaad and the other JAMA Psychiatry paper authors produced a cooked conclusion about American Muslims they could not legitimately support, then lied to support it. There should be no confusion about this.

The authors hyped this lie in the media and raised money, including zakat, from the Muslim public. They took full advantage of conflicts of interest they obviously had but did not bother to disclose. The authors also attacked a Muslim scholar for lying and spreading disinformation when they knew he was telling the truth and privately admitted as much. They then brazenly doctored the results of a p-value to make themselves look good, right in plain sight.

Muslims in the United States need to know about this grift, even if it means the public will have less confidence in Muslim mental health professionals.

Who has “stigma”?

The term “stigma” comes from Greek: it’s a physical mark or brand that comes as a result of disgrace, historically because of things like lying and cheating. The ethical history of the mental health profession is a waking nightmare, mainly because it has had an overabundance of people who cut corners while residing in a moral wasteland where almost anything is okay. If there is a stigma around mental health care, it’s with the mental health profession and not ordinary Muslims. Stigma has come to a sector that helped the US government torture Muslims and participated in countering violent extremism, the repressed memory movement, and other horrors that extend throughout the sector’s history. This contrived Muslim suicide saga is just another chapter in an ongoing story.

Muslim mental health providers can be beneficial

Muslim mental health professionals can be a benefit to humanity and move us away from the stigma caused by the mental health sector’s sordid history. To do this, they need to move beyond being a den of Zakat-mooching fabulists, national security contractors, and ethically challenged hucksters on Islamic convention stages and Imam trainings on Muslim Suicide.

O Allah, show me the truth as truth and guide me to follow it. Show me the false as false and guide me to avoid it.

Please feel free to comment, especially if you feel I was unclear on something. I will do my best to respond to any questions.
